Kappa Coefficient:

The Kappa Coefficient Calculator is a tool used in statistics, particularly in inter-rater reliability studies and research methodology. It measures how well raters agree when classifying items into categories, beyond the agreement expected by chance alone. Cohen's kappa, computed here, compares two raters; extensions such as Fleiss' kappa handle more than two.

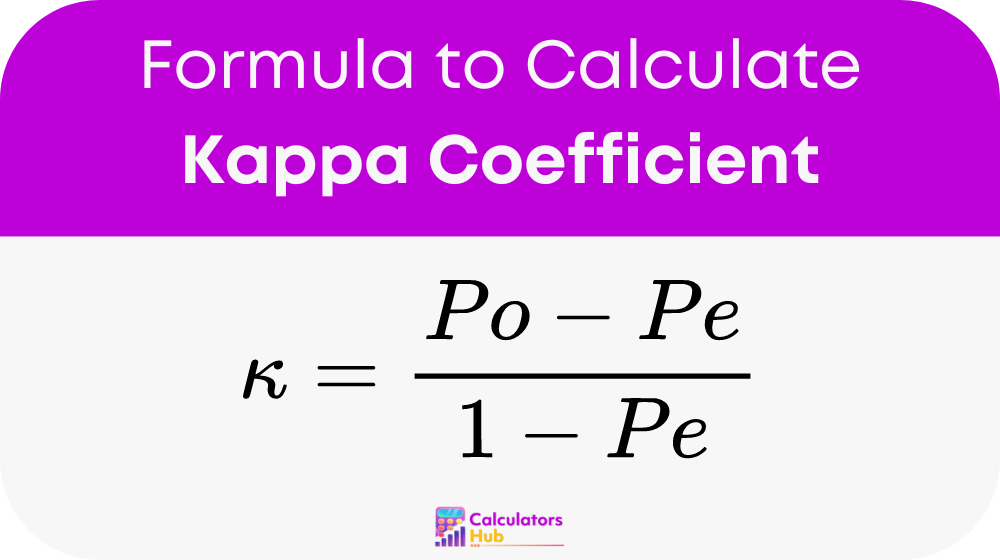

Formula of Kappa Coefficient Calculator

The Kappa Coefficient (κ) is calculated using the following formula:

κ = (Po – Pe) / (1 – Pe)

Where:

- Po: Represents the relative observed agreement among raters.

- Pe: Denotes the hypothetical probability of chance agreement.
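A minimal Python sketch of the formula, assuming Po and Pe are already known as proportions between 0 and 1. The function name `kappa_from_proportions` is hypothetical and not part of any library.

```python
def kappa_from_proportions(po: float, pe: float) -> float:
    """Cohen's kappa from observed agreement po and chance agreement pe.

    Hypothetical helper for illustration; inputs are proportions, not counts.
    """
    if not 0.0 <= po <= 1.0:
        raise ValueError("po must be a proportion in [0, 1]")
    if not 0.0 <= pe < 1.0:
        # pe == 1 would make the denominator zero
        raise ValueError("pe must be a proportion in [0, 1)")
    # Agreement achieved beyond chance, divided by the maximum achievable beyond chance
    return (po - pe) / (1.0 - pe)


print(kappa_from_proportions(1.0, 0.5))  # 1.0: perfect agreement
print(kappa_from_proportions(0.5, 0.5))  # 0.0: agreement no better than chance
```

The denominator 1 – Pe is the most agreement that could still occur beyond chance, so κ expresses the observed excess as a fraction of that maximum.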

To calculate Po:

- Divide the number of items to which both raters assigned the same category by the total number of items rated.
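A minimal Python sketch of this step, assuming each rater's labels are stored in a list in the same item order. The function name `observed_agreement` and the sample labels are hypothetical.

```python
from collections.abc import Hashable, Sequence


def observed_agreement(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Po: share of items to which both raters assigned the same category."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must label the same, non-empty list of items")
    agreements = sum(1 for a, b in zip(rater_a, rater_b) if a == b)
    return agreements / len(rater_a)


# Hypothetical labels for five items, in the same order for both raters
rater_a = ["yes", "no", "yes", "yes", "no"]
rater_b = ["yes", "no", "no", "yes", "no"]
print(observed_agreement(rater_a, rater_b))  # 0.8 (4 of 5 items match)
```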

To calculate Pe:

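- Estimate the agreement expected by chance from each rater's marginal probabilities: for each category, multiply the proportion of items rater A assigned to it by the proportion rater B assigned to it, then sum these products over all categories.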
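A minimal Python sketch of the chance-agreement step, assuming the same list-of-labels input as above. The function name `expected_agreement` and the sample labels are hypothetical.

```python
from collections import Counter
from collections.abc import Hashable, Sequence


def expected_agreement(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Pe: chance agreement from each rater's marginal category proportions."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("both raters must label the same, non-empty list of items")
    counts_a = Counter(rater_a)  # how often rater A used each category
    counts_b = Counter(rater_b)  # how often rater B used each category
    categories = counts_a.keys() | counts_b.keys()
    # Sum over categories of (count_a / n) * (count_b / n); Counter returns 0 for unused ones
    return sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)


rater_a = ["yes", "no", "yes", "yes", "no"]  # 3 yes, 2 no
rater_b = ["yes", "no", "no", "yes", "no"]   # 2 yes, 3 no
print(expected_agreement(rater_a, rater_b))  # 0.48 = 0.6*0.4 + 0.4*0.6
```

Multiplying the counts first and dividing by n² once keeps the arithmetic in integers until the last step.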

Once you have values for Po and Pe, plug them into the formula to find Cohen’s kappa.

Table of Sample Values

| Observed Agreement (Po) | Expected Agreement (Pe) | Kappa Coefficient (κ) |
|---|---|---|
| 0.1 | 0.1 | 0.000 |
| 0.2 | 0.1 | 0.111 |
| 0.3 | 0.1 | 0.222 |
| 0.4 | 0.1 | 0.333 |
| 0.5 | 0.1 | 0.444 |
| 0.6 | 0.1 | 0.556 |
| 0.7 | 0.1 | 0.667 |
| 0.8 | 0.1 | 0.778 |
| 0.9 | 0.1 | 0.889 |
| 0.1 | 0.2 | -0.125 |
| 0.2 | 0.2 | 0.000 |
| 0.3 | 0.2 | 0.125 |
| 0.4 | 0.2 | 0.250 |
| 0.5 | 0.2 | 0.375 |
| 0.6 | 0.2 | 0.500 |
| 0.7 | 0.2 | 0.625 |
| 0.8 | 0.2 | 0.750 |
| 0.9 | 0.2 | 0.875 |
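The κ column follows directly from the formula, so it can be regenerated to check each row. A small Python sketch (the helper name `kappa` is hypothetical) that prints rows in the table's own pipe format:

```python
def kappa(po: float, pe: float) -> float:
    """Cohen's kappa from observed (po) and chance (pe) agreement."""
    return (po - pe) / (1.0 - pe)


# Rows for Pe = 0.1 and Pe = 0.2, with Po stepping from 0.1 to 0.9
for pe in (0.1, 0.2):
    for step in range(1, 10):
        po = step / 10
        print(f"| {po:.1f} | {pe:.1f} | {kappa(po, pe):.3f} |")
```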

Example of Kappa Coefficient Calculator

Dr. Smith and Dr. Johnson independently diagnose patients for a disease. Out of 100 patients, they agree on 80 diagnoses.

- Observed Agreement (Po): 80/100 = 0.8

- Expected Agreement (Pe): assume, for illustration, that the chance agreement implied by the two doctors' marginal diagnosis rates is 0.3

Using the Kappa Coefficient formula: κ = (0.8 – 0.3) / (1 – 0.3) ≈ 0.7143
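The example's arithmetic, reproduced in Python as a minimal check. Pe = 0.3 is the example's assumed value, not one derived from real diagnosis data.

```python
# Figures from the Dr. Smith / Dr. Johnson example
agreed, total = 80, 100
po = agreed / total  # observed agreement, 0.8
pe = 0.3             # chance agreement assumed in the example
kappa = (po - pe) / (1 - pe)
print(f"kappa = {kappa:.4f}")  # kappa = 0.7143
```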

Result: κ ≈ 0.71 falls in the "substantial" band (0.61 to 0.80) of the widely used Landis and Koch scale, indicating good agreement between the two doctors beyond chance and suggesting consistent diagnoses.

Most Common FAQs

The Kappa Coefficient measures the level of agreement between raters beyond what would be expected by chance alone. Cohen's kappa compares two raters; extensions such as Fleiss' kappa cover more than two. It is commonly used in inter-rater reliability studies to assess the consistency of categorical judgments.

The interpretation of the Kappa Coefficient depends on its value. A value of 1 indicates perfect agreement, 0 indicates agreement equivalent to chance, and negative values indicate agreement worse than chance. Under one common guideline (Fleiss), values above 0.75 indicate excellent agreement, values from 0.40 to 0.75 fair to good agreement, and values below 0.40 poor agreement.
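A minimal Python sketch that turns a κ value into a verbal label. It assumes Fleiss-style cut-offs at 0.40 and 0.75, with "fair to good" for the middle band; the function name `interpret_kappa` is hypothetical, and other published scales draw the lines differently.

```python
def interpret_kappa(k: float) -> str:
    """Verbal label for kappa using Fleiss-style cut-offs at 0.40 and 0.75."""
    if k < 0:
        return "worse than chance"
    if k == 0:
        return "chance-level agreement"
    if k < 0.40:
        return "poor"
    if k <= 0.75:
        return "fair to good"
    if k < 1:
        return "excellent"
    return "perfect agreement"


for value in (-0.2, 0.0, 0.25, 0.7143, 0.9, 1.0):
    print(value, interpret_kappa(value))
```

Under these cut-offs the doctors' κ of about 0.71 is labelled "fair to good", whereas the Landis and Koch scale calls it "substantial", so any reported label should name the scale it uses.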

Several factors can influence the Kappa Coefficient, including the complexity of the rating task, the number of raters, and the prevalence of the categories being rated: when one category dominates, Pe is high and κ can be low even when raw agreement is high. Differences in rater training or in understanding of the rating criteria can also affect agreement levels.
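To illustrate the prevalence effect, here is a minimal Python sketch using two hypothetical 2×2 tables of 100 items each (counts invented for illustration). Both show 80% raw agreement, but when "yes" dominates, chance agreement rises and κ drops sharply. The function name `kappa_2x2` is hypothetical.

```python
def kappa_2x2(both_yes: int, a_yes_b_no: int, a_no_b_yes: int, both_no: int):
    """Return (Po, Pe, kappa) for two raters making yes/no judgments."""
    n = both_yes + a_yes_b_no + a_no_b_yes + both_no
    po = (both_yes + both_no) / n
    a_yes = both_yes + a_yes_b_no   # items rater A called "yes"
    b_yes = both_yes + a_no_b_yes   # items rater B called "yes"
    # Chance agreement: P(both yes) + P(both no) under independent marginals
    pe = (a_yes * b_yes + (n - a_yes) * (n - b_yes)) / (n * n)
    return po, pe, (po - pe) / (1 - pe)


balanced = kappa_2x2(40, 10, 10, 40)  # "yes" used for half the items
skewed = kappa_2x2(75, 10, 10, 5)     # "yes" used for 85% of the items
for name, (po, pe, k) in (("balanced", balanced), ("skewed", skewed)):
    print(f"{name}: Po={po:.2f} Pe={pe:.3f} kappa={k:.3f}")
# balanced: Po=0.80 Pe=0.500 kappa=0.600
# skewed: Po=0.80 Pe=0.745 kappa=0.216
```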