The Beta Error Calculator is a statistical tool used to estimate the probability of making a Type II error in hypothesis testing. A Type II error occurs when a statistical test fails to reject a false null hypothesis. In simpler terms, it is the error of concluding that there is no effect or difference when, in fact, there is one. This type of error is denoted by the Greek letter β (beta). The Beta Error Calculator helps researchers and analysts determine this probability, which is crucial for understanding the reliability of their tests and making informed decisions based on the results.

Understanding the likelihood of a Type II error is important in fields such as medical research, economics, and social sciences, where making incorrect conclusions can have significant consequences. The Beta Error Calculator provides insights into the test’s statistical power, guiding researchers on whether their sample size is sufficient or if they need to adjust their study design.

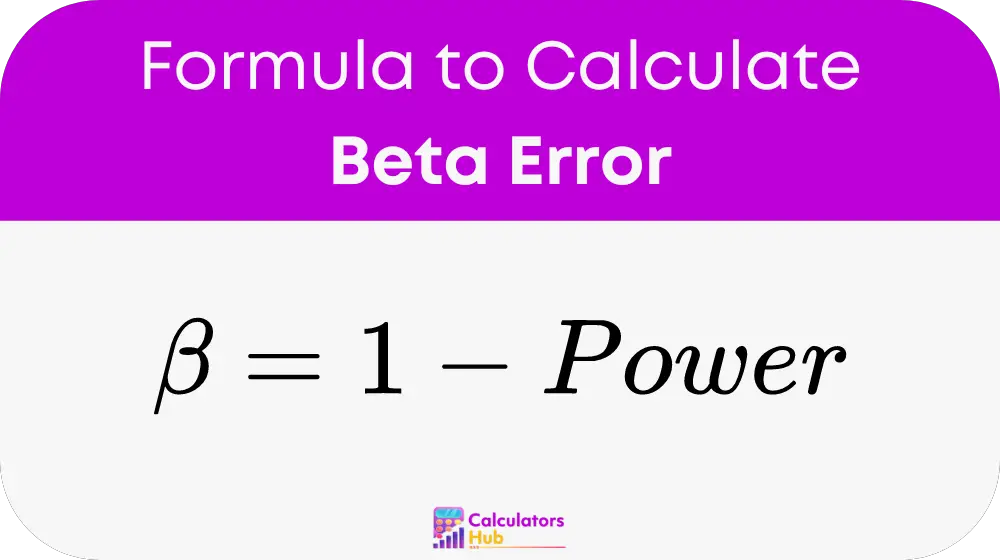

Beta Error Calculation Formula

The probability of making a Type II error (β) depends on several factors: the effect size, the sample size, the significance level (α), and the population’s standard deviation. In practice, β is obtained through statistical power, the probability of correctly rejecting a false null hypothesis. The two are complements:

$$\text{Power} = 1 - \beta$$

To calculate β, researchers typically work through the following steps:

- Define the Null Hypothesis (H0) and Alternative Hypothesis (H1):
  - The null hypothesis (H0) typically represents no effect or no difference. The alternative hypothesis (H1) represents the effect or difference the researcher aims to detect.
- Specify the Effect Size:
  - The effect size is the magnitude of the difference between the population parameter assumed under H0 and its true value under H1, that is, the difference you want to be able to detect.
- Determine the Standard Deviation (σ) and Standard Error (SE):
  - The standard deviation (σ) is either known or estimated from sample data.
  - The standard error (SE) is calculated as σ / sqrt(n), where n is the sample size.
- Set the Significance Level (α):
  - This is the probability of making a Type I error (false positive). Common choices for α are 0.05 and 0.01.
- Calculate the Critical Value (Zα):
  - The critical value is taken from the standard normal distribution: the value with probability α above it for a one-tailed test, or with α/2 in each tail for a two-tailed test.
- Calculate the Non-Centrality Parameter (δ):
  - δ = (Effect Size) / SE, which expresses the effect size in standard-error units.
- Determine the Power (1 – β):
  - For a one-tailed z-test with known σ, Power = Φ(δ – Zα), where Φ is the standard normal cumulative distribution function. For other tests, such as t-tests, power is computed with statistical software or power tables from the corresponding non-central distribution.
- Calculate β:
  - β = 1 – Power
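For the simplest case, a one-tailed (upper) z-test with known σ, the power step has a closed form. This derivation assumes that under H1 the standardized test statistic is approximately Z ~ N(δ, 1) and that H0 is rejected when Z > Zα; Φ denotes the standard normal cumulative distribution function:

$$
\begin{aligned}
\text{Power} &= P(Z > Z_\alpha \mid H_1) = 1 - \Phi(Z_\alpha - \delta) = \Phi(\delta - Z_\alpha),\\
\beta &= 1 - \text{Power} = \Phi(Z_\alpha - \delta).
\end{aligned}
$$

Two-tailed tests add a second, usually negligible, rejection tail, and t-tests replace the shifted normal with the non-central t distribution, which is where software or power tables come in.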

This step-by-step approach lets researchers estimate the likelihood of a Type II error and judge whether their test has enough power to detect the effect they care about.
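The steps above can be sketched in Python. This is a minimal sketch assuming a one-tailed (upper) z-test with known σ, so the "non-central distribution" is simply a normal distribution shifted by δ. The function name `beta_error` is hypothetical and not part of any calculator's API; the code uses only `statistics.NormalDist` from the standard library (Python 3.8+).

```python
from statistics import NormalDist


def beta_error(effect_size: float, sigma: float, n: int, alpha: float = 0.05) -> float:
    """Type II error probability for a one-tailed (upper) z-test with known sigma."""
    std_normal = NormalDist()                      # standard normal: mean 0, sd 1
    se = sigma / n ** 0.5                          # standard error = sigma / sqrt(n)
    delta = effect_size / se                       # non-centrality parameter
    z_alpha = std_normal.inv_cdf(1 - alpha)        # one-tailed critical value Z_alpha
    # Under H1 the z statistic is ~ N(delta, 1), so power = P(Z > Z_alpha).
    power = 1 - std_normal.cdf(z_alpha - delta)
    return 1 - power                               # beta = 1 - power


# Illustrative inputs (not from the text): effect 0.3, sigma 1.0, n 50.
print(f"beta = {beta_error(effect_size=0.3, sigma=1.0, n=50):.3f}")
```

For a t-test with σ estimated from the data, the shifted normal would be replaced by the non-central t distribution (for example `scipy.stats.nct`), so treat this function as a large-sample approximation.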

Common Terms and Conversion Table

To make beta error calculations easier to follow, the table below summarizes common terms used in statistical hypothesis testing.

| Term | Definition |
|---|---|
| Beta Error (β) | Probability of failing to reject a false null hypothesis (Type II error) |
| Statistical Power | Probability of correctly rejecting a false null hypothesis, calculated as 1 – β |
| Effect Size | The magnitude of the difference between the null and alternative hypotheses |
| Standard Deviation (σ) | Measure of the amount of variation or dispersion in a set of values |
| Standard Error (SE) | Standard deviation of the sampling distribution of the sample mean, calculated as σ / sqrt(n) |
| Significance Level (α) | Probability of rejecting a true null hypothesis (Type I error) |
| Critical Value (Zα) | Value that separates the rejection region from the non-rejection region in hypothesis testing |
| Non-Centrality Parameter (δ) | How far the test statistic's distribution under H1 is shifted from its distribution under H0, in standard-error units (δ = Effect Size / SE) |

This table serves as a quick reference for the quantities the Beta Error Calculator works with.
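The critical value Zα depends on whether the test is one- or two-tailed. As a hedged sketch using only Python's standard library, the hypothetical helper `critical_z` below converts a significance level α into Zα by inverting the standard normal CDF (splitting α across both tails in the two-tailed case):

```python
from statistics import NormalDist


def critical_z(alpha: float, two_tailed: bool = False) -> float:
    """Standard normal critical value for significance level alpha."""
    tail = alpha / 2 if two_tailed else alpha   # two-tailed tests put alpha/2 in each tail
    return NormalDist().inv_cdf(1 - tail)


for alpha in (0.10, 0.05, 0.01):
    print(f"alpha={alpha:.2f}  one-tailed={critical_z(alpha):.3f}  "
          f"two-tailed={critical_z(alpha, two_tailed=True):.3f}")
# prints:
# alpha=0.10  one-tailed=1.282  two-tailed=1.645
# alpha=0.05  one-tailed=1.645  two-tailed=1.960
# alpha=0.01  one-tailed=2.326  two-tailed=2.576
```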

Example of Beta Error Calculator

Let’s walk through a practical example to demonstrate how the Beta Error Calculator works.

Assume a researcher is conducting a study to determine whether a new drug is more effective than a placebo. The null hypothesis (H0) is that there is no difference in effectiveness, while the alternative hypothesis (H1) is that the new drug is more effective. The researcher uses the following data:

- Effect Size: 0.5 (difference in mean effectiveness)

- Standard Deviation (σ): 1.2

- Sample Size (n): 100

- Significance Level (α): 0.05

Steps:

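- Calculate the Standard Error (SE):
  - SE = σ / sqrt(n) = 1.2 / sqrt(100) = 0.12
- Calculate the Non-Centrality Parameter (δ):
  - δ = Effect Size / SE = 0.5 / 0.12 ≈ 4.17
- Determine the Critical Value (Zα) for α = 0.05:
  - For a one-tailed test at α = 0.05, Zα ≈ 1.645
- Calculate the Power (1 – β):
  - Treating this as a one-tailed z-test with known σ, Power = Φ(δ – Zα) = Φ(4.17 – 1.645) ≈ Φ(2.52) ≈ 0.994, where Φ is the standard normal cumulative distribution function. Statistical software or power tables give the same result.
- Calculate β:
  - β = 1 – Power ≈ 1 – 0.994 = 0.006

In this example, the probability of making a Type II error (β) is about 0.006, meaning there is roughly a 0.6% chance that the test will fail to detect the drug’s effectiveness if the true difference really is 0.5. Because the effect is large relative to the standard error, the study is very well powered; the common design target of 80% power (β = 0.20) would be reached with only about 36 observations under the same assumptions.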
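The example's arithmetic can be checked with a few lines of Python. This sketch assumes a one-tailed z-test with σ treated as known, and uses only the standard library's `statistics.NormalDist` for Φ and its inverse:

```python
from statistics import NormalDist

# Inputs from the drug-versus-placebo example.
effect_size, sigma, n, alpha = 0.5, 1.2, 100, 0.05

se = sigma / n ** 0.5                        # 1.2 / 10 = 0.12
delta = effect_size / se                     # 0.5 / 0.12 ≈ 4.17
z_alpha = NormalDist().inv_cdf(1 - alpha)    # one-tailed critical value ≈ 1.645
power = NormalDist().cdf(delta - z_alpha)    # Φ(δ - Zα)
beta = 1 - power                             # Type II error probability

print(f"SE={se:.2f}  delta={delta:.2f}  z_alpha={z_alpha:.3f}  power={power:.3f}  beta={beta:.3f}")
# prints: SE=0.12  delta=4.17  z_alpha=1.645  power=0.994  beta=0.006
```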

Most Common FAQs

A Type I error occurs when a true null hypothesis is incorrectly rejected, while a Type II error occurs when a false null hypothesis is not rejected. Type I errors are false positives and Type II errors are false negatives; their probabilities are α and β, respectively.
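To make the false-positive/false-negative distinction concrete, a Monte Carlo sketch can simulate many studies and count wrong decisions. The setup (a one-tailed z-test of H0: mean = 0 with known σ = 1, n = 30, and a true mean of 0.3 when H0 is false) and the helper name `rejection_rate` are illustrative assumptions, not part of the calculator:

```python
import random
from statistics import NormalDist


def rejection_rate(true_mean: float, sigma: float, n: int, alpha: float,
                   trials: int = 10_000, seed: int = 0) -> float:
    """Share of simulated one-tailed z-tests of H0: mean = 0 that reject H0."""
    rng = random.Random(seed)                    # fixed seed for reproducibility
    z_alpha = NormalDist().inv_cdf(1 - alpha)    # one-tailed critical value
    se = sigma / n ** 0.5                        # standard error of the mean
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        z = (sum(sample) / n) / se               # z statistic with known sigma
        if z > z_alpha:
            rejections += 1
    return rejections / trials


# H0 true (mean 0): every rejection is a Type I error, so the rate should be near alpha.
type_i_rate = rejection_rate(true_mean=0.0, sigma=1.0, n=30, alpha=0.05)
# H0 false (mean 0.3): every non-rejection is a Type II error, so this estimates beta.
type_ii_rate = 1 - rejection_rate(true_mean=0.3, sigma=1.0, n=30, alpha=0.05)
print(f"Type I rate ~ {type_i_rate:.3f}, Type II rate ~ {type_ii_rate:.3f}")
```

With these inputs the Type I rate should land close to 0.05 and the Type II rate close to 0.50, which matches the closed-form one-tailed z-test value β = Φ(Zα – δ) with δ = 0.3 / (1 / sqrt(30)) ≈ 1.64.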

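To reduce the probability of a Type II error, you can increase the sample size, reduce measurement variability (σ), design the study to detect a larger minimum effect size, or use a higher significance level (α), which trades fewer Type II errors for more Type I errors. Each of these increases the statistical power of the test.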
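The effect of each lever can be seen by changing one input at a time. This is a sketch under the same one-tailed, known-σ z-test assumption; the baseline values (effect 0.3, σ = 1.0, n = 50, α = 0.05) and the function name `beta_error` are hypothetical:

```python
from statistics import NormalDist


def beta_error(effect_size: float, sigma: float, n: int, alpha: float = 0.05) -> float:
    """Type II error for a one-tailed z-test with known sigma (assumed model)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha)    # critical value
    delta = effect_size / (sigma / n ** 0.5)     # effect in standard-error units
    return NormalDist().cdf(z_alpha - delta)     # beta = P(Z <= Z_alpha) with Z ~ N(delta, 1)


# Hypothetical baseline, then change one lever at a time.
print(f"baseline       beta={beta_error(0.3, 1.0, 50):.3f}")
print(f"n = 100        beta={beta_error(0.3, 1.0, 100):.3f}")
print(f"sigma = 0.8    beta={beta_error(0.3, 0.8, 50):.3f}")
print(f"effect = 0.4   beta={beta_error(0.4, 1.0, 50):.3f}")
print(f"alpha = 0.10   beta={beta_error(0.3, 1.0, 50, alpha=0.10):.3f}")
```

Every change lowers β relative to the baseline; the α change does so only by accepting more Type I errors.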

Calculating β is important because it helps you understand the likelihood of failing to detect a true effect. Knowing β allows you to assess the reliability of your hypothesis test and make informed decisions about study design and sample size.