The Cohen’s Kappa Coefficient Calculator is a statistical tool that measures the level of agreement between two raters or observers while accounting for agreement that could occur by chance. It is particularly useful where judgments are subjective, such as psychological assessments, content classification, or medical diagnostics. Unlike simple percentage agreement, Cohen’s Kappa corrects for random chance, giving a more accurate measure of inter-rater reliability. The calculator belongs to the family of statistical reliability calculators and helps researchers, statisticians, and professionals evaluate how consistently categorical data are rated.

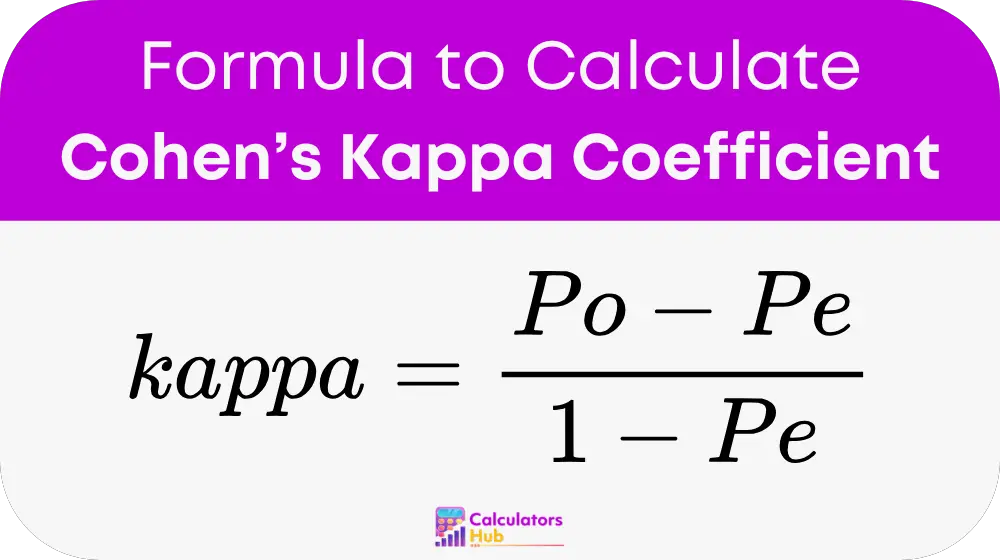

Formula of Cohen’s Kappa Coefficient Calculator

Cohen’s Kappa is calculated using the formula:

κ = (Po - Pe) / (1 - Pe)

Where:

- Po is the observed proportion of agreement between the raters.

- Pe is the expected proportion of agreement by chance.
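The formula translates directly into code. Here is a minimal Python sketch, assuming Po and Pe are already available as proportions between 0 and 1. The function name `cohens_kappa` is illustrative and not taken from any particular library.

```python
def cohens_kappa(po: float, pe: float) -> float:
    """Return Cohen's kappa from observed (po) and expected (pe) agreement proportions."""
    if not (0.0 <= po <= 1.0 and 0.0 <= pe <= 1.0):
        raise ValueError("po and pe must be proportions between 0 and 1")
    if pe == 1.0:
        # Chance agreement is already perfect, so the denominator would be zero.
        raise ValueError("kappa is undefined when pe == 1")
    return (po - pe) / (1.0 - pe)


# First row of the pre-calculated table: Po = 0.90, Pe = 0.50
print(round(cohens_kappa(0.90, 0.50), 2))  # 0.8
```

κ is undefined when Pe = 1, which happens only when both raters place every observation in the same single category.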

Detailed Formulas:

Observed Agreement (Po):

Po = Number of Agreements / Total Observations
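To compute Po from raw ratings, a minimal Python sketch can compare two equal-length lists of labels, where position k holds each rater's judgment of observation k. The list layout, the sample ratings, and the function name `observed_agreement` are illustrative assumptions, not part of any specific calculator.

```python
from collections.abc import Hashable, Sequence


def observed_agreement(ratings_a: Sequence[Hashable], ratings_b: Sequence[Hashable]) -> float:
    """Po: share of observations on which both raters chose the same category."""
    if len(ratings_a) != len(ratings_b):
        raise ValueError("both raters must rate the same observations")
    if len(ratings_a) == 0:
        raise ValueError("at least one observation is required")
    # Count positions where the two raters' labels match
    agreements = sum(a == b for a, b in zip(ratings_a, ratings_b))
    return agreements / len(ratings_a)


# Hypothetical ratings of five observations
rater_a = ["yes", "yes", "no", "no", "yes"]
rater_b = ["yes", "no", "no", "no", "yes"]
print(observed_agreement(rater_a, rater_b))  # 0.8
```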

Expected Agreement (Pe):

Pe = Σ (PAi × PBi)

Where:

- PAi is the proportion of times Rater A used category i, calculated as: Number of times Rater A used category i / Total Observations.

- PBi is the proportion of times Rater B used category i, calculated similarly.
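Pe can be sketched in the same way. Count how often each rater used each category, convert the counts to proportions, and sum the products over every category. This Python sketch assumes each rater's labels are given as an equal-length list. The function name `expected_agreement` and the sample ratings are hypothetical.

```python
from collections import Counter
from collections.abc import Hashable, Sequence


def expected_agreement(ratings_a: Sequence[Hashable], ratings_b: Sequence[Hashable]) -> float:
    """Pe: sum over categories i of PAi * PBi, using each rater's own category proportions."""
    if len(ratings_a) != len(ratings_b):
        raise ValueError("both raters must rate the same observations")
    n = len(ratings_a)
    if n == 0:
        raise ValueError("at least one observation is required")
    counts_a = Counter(ratings_a)  # times Rater A used each category
    counts_b = Counter(ratings_b)  # times Rater B used each category
    # Counter returns 0 for a missing key, so a category used by only one rater adds 0
    categories = set(counts_a) | set(counts_b)
    return sum((counts_a[c] / n) * (counts_b[c] / n) for c in categories)


# Hypothetical ratings: A uses "yes" 3 of 5 times, B uses "yes" 2 of 5 times
rater_a = ["yes", "yes", "no", "no", "yes"]
rater_b = ["yes", "no", "no", "no", "yes"]
print(expected_agreement(rater_a, rater_b))  # 0.6*0.4 + 0.4*0.6, approximately 0.48
```

Categories used by only one rater add nothing to Pe, because the other rater's proportion for that category is zero.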

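Subtracting Pe removes the agreement that would be expected if each rater assigned categories independently at their observed rates. As a result, κ = 1 indicates perfect agreement, κ = 0 indicates agreement no better than chance, and negative values indicate agreement worse than chance.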

Pre-Calculated Table for Cohen’s Kappa Scenarios

The table below lists Cohen’s Kappa for several combinations of observed and expected agreement. The interpretations follow the widely used Landis and Koch (1977) benchmarks.

| Observed Agreement (Po) | Expected Agreement (Pe) | Cohen’s Kappa (κ) | Interpretation |
|---|---|---|---|
| 0.90 | 0.50 | 0.80 | Substantial agreement |
| 0.75 | 0.25 | 0.67 | Substantial agreement |
| 0.60 | 0.30 | 0.43 | Moderate agreement |
| 0.50 | 0.40 | 0.17 | Slight agreement |
| 0.30 | 0.20 | 0.13 | Slight agreement |
| 0.20 | 0.10 | 0.11 | Slight agreement |

Use the table to gauge reliability levels at a glance. For other combinations of Po and Pe, compute κ directly.
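The κ column can be re-derived from the Po and Pe columns. This Python sketch uses `decimal.Decimal` rather than floats so that the exact value 0.125 in the fifth row rounds half-up to 0.13, matching the table. With binary floats, 0.30 − 0.20 evaluates to slightly less than 0.10, and that row would print 0.12. The names `TABLE_ROWS` and `kappa_2dp` are illustrative.

```python
from decimal import Decimal, ROUND_HALF_UP

# (Po, Pe) pairs from the pre-calculated table, kept as strings so Decimal stores them exactly
TABLE_ROWS = [("0.90", "0.50"), ("0.75", "0.25"), ("0.60", "0.30"),
              ("0.50", "0.40"), ("0.30", "0.20"), ("0.20", "0.10")]


def kappa_2dp(po: str, pe: str) -> Decimal:
    """Cohen's kappa from Po and Pe, rounded half-up to two decimal places."""
    p_o, p_e = Decimal(po), Decimal(pe)
    kappa = (p_o - p_e) / (Decimal(1) - p_e)
    return kappa.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


for po, pe in TABLE_ROWS:
    print(po, pe, kappa_2dp(po, pe))
```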

Example of Cohen’s Kappa Coefficient Calculator

Let’s consider an example. Suppose two medical raters evaluate the presence of a disease in 100 patients. Their results are:

- Rater A: Disease detected in 60 patients, absent in 40.

- Rater B: Disease detected in 55 patients, absent in 45.

Of the 100 patients, both raters agreed on 79: 47 in which both detected the disease and 32 in which both found it absent. In the remaining 21 cases, Rater A detected the disease and Rater B did not 13 times, and the reverse happened 8 times.

Step 1: Calculate Observed Agreement (Po)

Po = Number of Agreements / Total Observations

Po = 79 / 100 = 0.79

Step 2: Calculate Expected Agreement (Pe)

The proportion for each rater in each category:

- PA (Disease present) = 60/100 = 0.60, PA (Disease absent) = 40/100 = 0.40

- PB (Disease present) = 55/100 = 0.55, PB (Disease absent) = 45/100 = 0.45

Pe = (0.60 × 0.55) + (0.40 × 0.45) = 0.33 + 0.18 = 0.51

Step 3: Calculate Kappa

Kappa = (Po - Pe) / (1 - Pe)

Kappa = (0.79 - 0.51) / (1 - 0.51) = 0.28 / 0.49 ≈ 0.57

This kappa value indicates moderate agreement between the raters.
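Step 2 depends only on how often each rater chose each category, so it can be checked from the totals given for Rater A and Rater B. Here is a minimal Python sketch, assuming those totals are stored in plain dictionaries. The function name `expected_agreement_from_counts` is hypothetical.

```python
def expected_agreement_from_counts(counts_a: dict[str, int], counts_b: dict[str, int]) -> float:
    """Pe from each rater's category totals: sum of (Rater A share) * (Rater B share)."""
    n_a, n_b = sum(counts_a.values()), sum(counts_b.values())
    if n_a != n_b or n_a == 0:
        raise ValueError("both raters must rate the same, non-empty set of observations")
    # A category missing from one rater's totals contributes 0 to the sum
    categories = set(counts_a) | set(counts_b)
    return sum((counts_a.get(c, 0) / n_a) * (counts_b.get(c, 0) / n_b) for c in categories)


# Category totals from the example (100 patients)
rater_a = {"present": 60, "absent": 40}
rater_b = {"present": 55, "absent": 45}
pe = expected_agreement_from_counts(rater_a, rater_b)
print(round(pe, 2))  # 0.51
```

Because Pe uses only these totals, it cannot show how often the raters agreed on the same patient. Po must come from the patient-by-patient comparison in Step 1.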

Most Common FAQs

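What do different kappa values mean?

Under the widely used Landis and Koch (1977) benchmarks, values from 0.81 to 1.00 indicate almost perfect agreement, 0.61 to 0.80 substantial, 0.41 to 0.60 moderate, 0.21 to 0.40 fair, and 0.00 to 0.20 slight agreement. Negative values, meaning agreement worse than chance, indicate poor agreement.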
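A lookup of the Landis and Koch (1977) bands can be sketched in Python as follows. In this scale, "poor" is reserved for negative values and 0.00 to 0.20 counts as "slight". Because the published bands are stated to two decimals, treating each upper edge (for example exactly 0.80) as belonging to the lower band is an assumption of this sketch. The function name `interpret_kappa` is hypothetical.

```python
def interpret_kappa(kappa: float) -> str:
    """Label a kappa value using the Landis and Koch (1977) benchmark bands."""
    if kappa > 1:
        raise ValueError("kappa cannot exceed 1")
    if kappa < 0:
        return "Poor"  # agreement worse than chance
    # Inclusive upper edge of each band, checked from lowest to highest
    bands = [(0.20, "Slight"), (0.40, "Fair"), (0.60, "Moderate"), (0.80, "Substantial")]
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "Almost perfect"


print(interpret_kappa(0.67))  # Substantial
```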

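Why use Cohen’s Kappa instead of simple percentage agreement?

Cohen’s Kappa accounts for agreement that could occur by chance, making it a more reliable measure than simple percentage agreement. For example, two raters who each independently label 90% of cases "yes" would be expected to agree on 82% of cases (0.90 × 0.90 + 0.10 × 0.10) purely by chance.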

Can Cohen’s Kappa be used with more than two raters?

No, Cohen’s Kappa is designed for two raters. For more than two raters, other measures such as Fleiss’ Kappa are used.