The Beta Variance Calculator is a statistical tool that computes the variance of the estimated Beta coefficients in a linear regression model. A coefficient's variance tells you how precisely it has been estimated, which makes it essential for judging the reliability of the parameters in a regression analysis. Researchers, data analysts, and statisticians use it to assess the stability of their models and to make informed decisions. By quantifying how much a Beta coefficient would be expected to vary from sample to sample, the Beta Variance Calculator helps in interpreting the strength and significance of each predictor variable in a model.

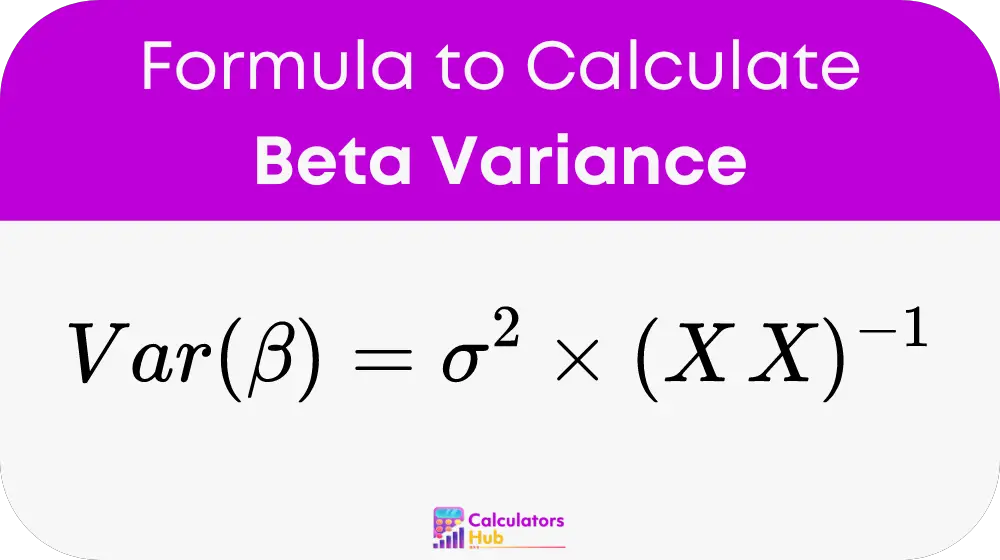

Formula of the Beta Variance Calculator

Step 1: Gather the Required Values

To calculate the variance of the Beta coefficient, you need the following values:

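- σ² (Variance of the Error Term): The variance of the error term in the regression model. Because the true σ² is unknown in practice, it is estimated by the residual variance, s² = SSR / (n - p), where SSR is the sum of squared residuals, n is the number of observations, and p is the number of estimated coefficients (including the intercept).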

- X'X (Cross-Product of the Predictor Matrix): The transpose of the predictor matrix X multiplied by X itself. Its entries are the sums of squares and cross-products of the predictor columns.

- (X'X)⁻¹ (Inverse of X'X): This represents the inverse of the matrix X'X.
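These inputs can be computed from raw data. The following is a minimal pure-Python sketch, assuming a design matrix X whose first column of ones represents the intercept and a response vector y. The helper names (`transpose`, `matmul`, `inverse`, `residual_variance`) and the four-observation data set are hypothetical and chosen only for illustration. It assumes an ordinary least squares fit, estimates σ² with SSR / (n - p), and inverts X'X by Gauss-Jordan elimination.

```python
from typing import List

Matrix = List[List[float]]


def transpose(a: Matrix) -> Matrix:
    """Swap rows and columns (X -> X')."""
    return [list(col) for col in zip(*a)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (m x k) matrix by a (k x n) matrix."""
    b_cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols] for row in a]


def inverse(a: Matrix) -> Matrix:
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    # Build the augmented matrix [A | I].
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(a)]
    for col in range(n):
        # Use the row with the largest pivot to limit rounding error.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("X'X is singular: the predictors are linearly dependent")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Zero out this column in every other row.
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    # The left half is now I, so the right half is A^-1.
    return [row[n:] for row in aug]


def residual_variance(x: Matrix, y: List[float]) -> float:
    """Estimate sigma^2 as SSR / (n - p), assuming an ordinary least squares fit."""
    n, p = len(x), len(x[0])
    xt = transpose(x)
    xtx_inv = inverse(matmul(xt, x))
    # OLS estimate: beta_hat = (X'X)^-1 X'y
    beta_hat = [row[0] for row in matmul(xtx_inv, matmul(xt, [[v] for v in y]))]
    residuals = [yi - sum(b * xij for b, xij in zip(beta_hat, row))
                 for row, yi in zip(x, y)]
    return sum(e * e for e in residuals) / (n - p)


# Hypothetical toy data: a column of ones (intercept) plus one predictor.
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
y = [1.0, 3.0, 2.0, 5.0]

xtx = matmul(transpose(X), X)         # X'X -> [[4.0, 6.0], [6.0, 14.0]]
xtx_inv = inverse(xtx)                # (X'X)^-1 -> approximately [[0.7, -0.3], [-0.3, 0.2]]
sigma2_hat = residual_variance(X, y)  # s^2 -> approximately 1.35
```

In practice a numerical library such as NumPy would replace the hand-written helpers; they are spelled out here only to make each step visible.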

Step 2: Calculate the Variance of the Beta Coefficient

You can calculate the variance of the Beta coefficient using the following formula:

Var(β) = σ² * (X'X)⁻¹

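This formula combines the variance of the error term with the structure of the predictor variables. The result is a matrix, often called the variance-covariance matrix of the coefficients: its diagonal entries are the variances of the individual Beta coefficients, and its off-diagonal entries are the covariances between pairs of coefficients. Larger values mean the coefficients would vary more from sample to sample, either because the data are noisy (a large σ²) or because the predictors carry little independent information (for example, when they are highly correlated with each other).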
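The formula itself is a single scalar-times-matrix step. The sketch below, a minimal illustration rather than a definitive implementation, applies it and then reads each coefficient's standard error off the diagonal. The function names `beta_covariance` and `standard_errors` and the input values are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def beta_covariance(sigma2: float, xtx_inv: Matrix) -> Matrix:
    """Var(beta) = sigma^2 * (X'X)^-1: scale every entry of the inverse by sigma^2."""
    if sigma2 < 0:
        raise ValueError("the error variance cannot be negative")
    return [[sigma2 * v for v in row] for row in xtx_inv]


def standard_errors(cov: Matrix) -> List[float]:
    """Square roots of the diagonal entries, i.e. of each coefficient's variance."""
    return [math.sqrt(cov[i][i]) for i in range(len(cov))]


# Hypothetical inputs, chosen only to illustrate the arithmetic.
cov = beta_covariance(2.0, [[0.5, 0.1], [0.1, 0.25]])
se = standard_errors(cov)
print(cov)  # [[1.0, 0.2], [0.2, 0.5]]
print(se)   # approximately [1.0, 0.7071]
```

Only the diagonal is used for the standard errors; the off-diagonal covariances matter when comparing or combining coefficients.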

General Terms Table

The table below defines the key terms used by the Beta Variance Calculator:

| Term | Description |
|---|---|
| Beta Coefficient (β) | The estimated effect of a predictor variable in a regression model: the expected change in the dependent variable for a one-unit change in that predictor, holding the other predictors constant. |
| Error Variance (σ²) | A measure of the dispersion of the error term in the regression model. |
| Predictor Variable Matrix (X) | A matrix containing the values of the independent variables in a regression analysis. |
| Transpose of X (X') | The transpose of the matrix X, in which rows and columns are swapped. |
| Inverse of X'X ((X'X)⁻¹) | The inverse of the matrix obtained by multiplying the transpose of X by X. |
| Residual Variance | The variance of the differences between the observed and predicted values in a regression model; it serves as the estimate of σ². |

Example of the Beta Variance Calculator

Let’s go through an example to demonstrate how to use the Beta Variance Calculator.

Step 1: Gather the Required Values

Suppose you have the following values from your regression analysis:

- Variance of the Error Term (σ²): 4

- X'X: A matrix derived from your predictor variables. For simplicity, assume it is a 2x2 matrix with values [[10, 2], [2, 5]].

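- (X'X)⁻¹: The inverse of the X'X matrix. For a 2x2 matrix [[a, b], [c, d]], the inverse is (1 / (ad - bc)) * [[d, -b], [-c, a]]. Here ad - bc = 10 * 5 - 2 * 2 = 46, so (X'X)⁻¹ = (1/46) * [[5, -2], [-2, 10]] ≈ [[0.1087, -0.0435], [-0.0435, 0.2174]].

Step 2: Calculate the Variance of the Beta Coefficient

Using the formula: Var(β) = σ² * (X'X)⁻¹

Multiply each element of the inverse matrix by the variance of the error term: Var(β) = 4 * (1/46) * [[5, -2], [-2, 10]]

This results in: Var(β) = (1/46) * [[20, -8], [-8, 40]] ≈ [[0.4348, -0.1739], [-0.1739, 0.8696]]

Thus, the variance-covariance matrix of the Beta coefficients is approximately [[0.4348, -0.1739], [-0.1739, 0.8696]]. The diagonal entries are the variances of the two coefficients (their square roots, about 0.659 and 0.933, are the standard errors), and the off-diagonal entry of -0.1739 is the covariance between them. Together, these values help you assess the stability and reliability of the estimates.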
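To double-check the arithmetic, the sketch below computes (X'X)⁻¹ directly from X'X = [[10, 2], [2, 5]] using the standard closed-form inverse of a 2x2 matrix, then scales it by σ² = 4. The helper name `inverse_2x2` is hypothetical, and the closed form applies only to 2x2 matrices; larger models need a general inversion routine or a numerical library.

```python
from typing import List

Matrix = List[List[float]]


def inverse_2x2(m: Matrix) -> Matrix:
    """Closed-form inverse of [[a, b], [c, d]]: (1 / (ad - bc)) * [[d, -b], [-c, a]]."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("the matrix is singular and has no inverse")
    return [[d / det, -b / det], [-c / det, a / det]]


sigma2 = 4.0                     # variance of the error term from the example
xtx = [[10.0, 2.0], [2.0, 5.0]]  # X'X from the example

xtx_inv = inverse_2x2(xtx)
var_beta = [[sigma2 * v for v in row] for row in xtx_inv]  # Var(beta) = sigma^2 * (X'X)^-1

# Sanity check: X'X multiplied by its inverse should give the identity matrix.
product = [[sum(xtx[i][k] * xtx_inv[k][j] for k in range(2)) for j in range(2)]
           for i in range(2)]
assert all(abs(product[i][j] - (1.0 if i == j else 0.0)) < 1e-12
           for i in range(2) for j in range(2))

for row in var_beta:
    print([round(v, 4) for v in row])
# [0.4348, -0.1739]
# [-0.1739, 0.8696]
```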

Most Common FAQs

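Why is it important to calculate the variance of Beta coefficients?

Calculating the variance of Beta coefficients is important because it shows how precisely the parameters of a regression model have been estimated. A lower variance means the coefficient is estimated more precisely and is more stable across samples, while a higher variance signals greater uncertainty. The square root of a coefficient's variance is its standard error, which is used to build confidence intervals and significance tests for that coefficient.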

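How does the variance of the error term affect the variance of the Beta coefficients?

The variance of the error term directly scales the variance of the Beta coefficients: because Var(β) = σ² * (X'X)⁻¹, doubling σ² doubles every variance and covariance in the matrix. A higher error variance therefore means the coefficients are estimated less precisely, so the estimated effects of the predictor variables on the dependent variable are less certain.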

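Can the Beta Variance Calculator be used for other types of regression models?

The Beta Variance Calculator is typically used for linear regression models estimated by ordinary least squares (OLS), and the formula Var(β) = σ² * (X'X)⁻¹ assumes that the errors have constant variance and are uncorrelated with each other. The principles can be extended to other types of regression models, provided the appropriate adjustments are made for the specific model's structure.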