Amdahl's Law, named after computer architect Gene Amdahl, gives the maximum overall improvement possible when only one part of a system is enhanced. In computing, it is commonly used to estimate how much faster a task can run when it moves from a single processor to multiple processors, making it a key metric for understanding both the benefits and the limits of parallelization.

Formula of Amdahl’s Law Calculator

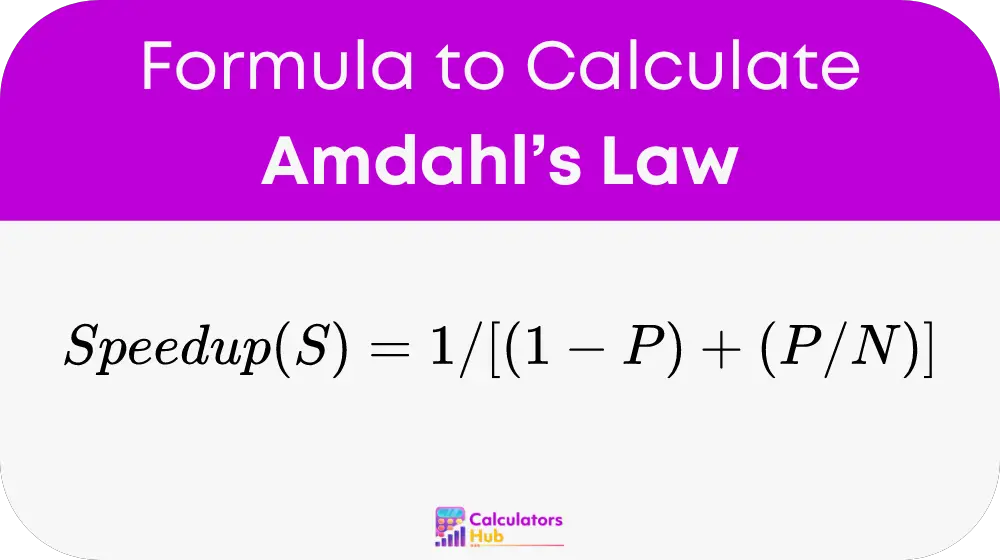

The Amdahl’s Law formula to calculate the speedup (S) of a task is given by:

Where:

- S is the speedup of the task.

- P is the proportion of the task that can be parallelized (a value between 0 and 1).

- N is the number of processors or execution units.

Detailed Components:

- (1 - P) represents the portion of the task that must be executed serially and cannot be parallelized.

- (P / N) represents the portion of the task that can be parallelized, divided among the N processors.

This formula gives the theoretical maximum speedup achievable with N processors, given how much of the task can be parallelized.
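As a minimal sketch, the formula translates directly into a small Python function. The name `amdahl_speedup` and the input checks (P between 0 and 1, N at least 1) are illustrative assumptions, not part of any library.

```python
def amdahl_speedup(p: float, n: int) -> float:
    """Theoretical speedup S = 1 / ((1 - p) + p / n) from Amdahl's Law.

    p: fraction of the task that can be parallelized (0 <= p <= 1)
    n: number of processors or execution units (n >= 1)
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 1:
        raise ValueError("n must be at least 1")
    serial = 1.0 - p   # portion that must run serially
    parallel = p / n   # parallelizable portion, divided among n processors
    return 1.0 / (serial + parallel)
```

For example, `amdahl_speedup(0.7, 10)` evaluates to about 2.7, and a fully parallelizable task (`p = 1.0`) gives a speedup equal to `n`.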

Table for General Terms

| Term | Definition |
|---|---|
| Speedup (S) | The factor by which a system’s performance improves |
| P (Proportion) | The fraction of a task that can be parallelized |
| N (Processors) | The number of processors used in parallelization |
| Serial | Task components that cannot be parallelized |

Example of Amdahl’s Law Calculator

Consider a task where 70% of the operations can be parallelized (P = 0.7), and it is being executed on a system with 10 processors (N = 10):

Speedup (S) = 1 / [(1 - 0.7) + (0.7 / 10)] = 1 / [0.3 + 0.07] = 1 / 0.37 ≈ 2.7

This example shows how the Amdahl's Law Calculator can be used to estimate the speedup from parallel processing: even with 10 processors, the 30% serial portion limits the speedup to about 2.7.
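The same arithmetic can be read in terms of runtimes. The sketch below assumes a hypothetical single-processor runtime of 100 seconds (this value is not part of the example) and shows that only the parallel 70 seconds shrink when processors are added, while the 30 serial seconds do not.

```python
# Hypothetical baseline (assumption): the task takes 100 seconds on one processor.
t_single = 100.0
p = 0.7   # parallelizable fraction, from the example
n = 10    # number of processors, from the example

t_serial = (1 - p) * t_single     # serial part: unchanged by adding processors
t_parallel = p * t_single / n     # parallel part: divided among n processors
t_multi = t_serial + t_parallel   # total runtime on n processors

speedup = t_single / t_multi
print(f"{t_serial:.1f} s + {t_parallel:.1f} s = {t_multi:.1f} s, speedup = {speedup:.2f}")
# prints: 30.0 s + 7.0 s = 37.0 s, speedup = 2.70
```

Because speedup is the ratio of the single-processor time to the multi-processor time, the baseline cancels out: any assumed runtime yields the same S ≈ 2.7.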

Most Common FAQs

Amdahl's Law assumes a fixed workload (the problem size does not grow with the number of processors) and does not account for overheads related to parallel processing, such as communication and synchronization.

While Amdahl’s Law provides a theoretical maximum speedup, the speedup achieved in practice depends on how effectively the software can be parallelized.

Increasing the number of processors raises the potential speedup, but with diminishing returns: as N grows, the speedup approaches an upper bound of 1 / (1 - P), and that bound is lower the smaller the parallelizable proportion P is.
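The diminishing returns follow from the formula itself: as N grows, the term P / N shrinks toward zero, so S approaches 1 / (1 - P). The sketch below tabulates S for P = 0.7 with a hypothetical sequence of processor counts, then prints the limiting value for a few illustrative values of P. The function names are assumptions made for this example.

```python
def amdahl_speedup(p: float, n: int) -> float:
    """Amdahl's Law: S = 1 / ((1 - p) + p / n)."""
    return 1.0 / ((1.0 - p) + p / n)


def speedup_limit(p: float) -> float:
    """Upper bound on S as n grows without limit: 1 / (1 - p), valid for p < 1."""
    return 1.0 / (1.0 - p)


p = 0.7
for n in (1, 2, 4, 8, 16, 32, 64, 1024):
    print(f"N = {n:>4}: S = {amdahl_speedup(p, n):.3f}")
print(f"Limit as N grows (P = {p}): S -> {speedup_limit(p):.3f}")

# A smaller parallelizable fraction gives a lower ceiling on speedup.
for p_example in (0.5, 0.7, 0.9):
    print(f"P = {p_example}: maximum speedup = {speedup_limit(p_example):.2f}")
```

With P = 0.7, no number of processors can push the speedup past about 3.33, which is why the 10-processor example above already captures most of the achievable gain.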