This specialized tool aids researchers and statisticians by providing a standardized measure of agreement between raters beyond chance. It is particularly useful in fields like psychology, medicine, and any research involving observational data.

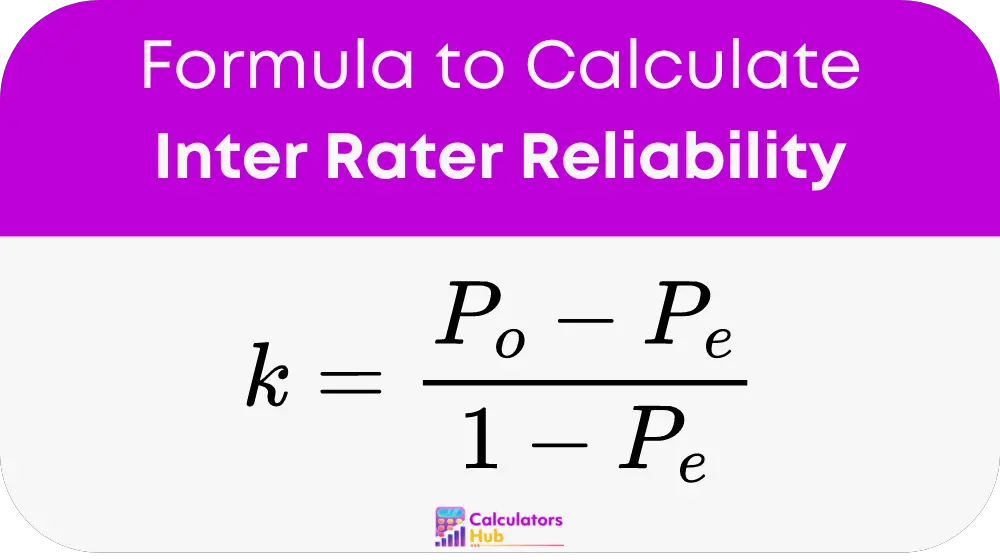

Formula of Inter Rater Reliability Calculator

The key formula used in measuring inter-rater reliability is Cohen’s Kappa (k), which quantifies the agreement between two raters after correcting for the agreement expected by chance. The formula is:

k = (P_o – P_e) / (1 – P_e)

Where:

- P_o is the observed agreement among raters.

- P_e is the expected agreement by chance.
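As a minimal sketch of the formula, the Python function below takes the two proportions directly. The function name `kappa_from_agreement` and the input checks are illustrative assumptions, not part of the calculator itself.

```python
def kappa_from_agreement(p_o: float, p_e: float) -> float:
    """Cohen's kappa from observed (p_o) and chance-expected (p_e) agreement.

    Hypothetical helper for illustration; both inputs are proportions in [0, 1].
    """
    if not (0.0 <= p_o <= 1.0 and 0.0 <= p_e <= 1.0):
        raise ValueError("p_o and p_e must be proportions between 0 and 1")
    if p_e == 1.0:
        # The denominator (1 - p_e) would be zero, so kappa is undefined.
        raise ValueError("kappa is undefined when p_e equals 1")
    return (p_o - p_e) / (1.0 - p_e)


# Perfect observed agreement gives kappa = 1.
print(kappa_from_agreement(1.0, 0.5))  # 1.0
# Observed agreement equal to chance agreement gives kappa = 0.
print(kappa_from_agreement(0.5, 0.5))  # 0.0
```

The two calls show the anchor points of the scale: 1 means perfect agreement, and 0 means the raters agree no more often than chance alone would predict.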

Step-by-Step Guide to Using the Inter Rater Reliability Calculator

Construct a Contingency Table:

Create a table to record different ratings assigned by two raters. For example:

|  | Rater B: Yes | Rater B: No |
|---|---|---|
| Rater A: Yes | a | b |
| Rater A: No | c | d |

Calculate Observed Agreement (P_o):

P_o = (a + d) / (a + b + c + d)

Calculate Expected Agreement (P_e):

P_e = ((a + b) * (a + c) + (c + d) * (b + d)) / (a + b + c + d)^2

Compute Cohen’s Kappa:

Using the values from above, substitute into the Cohen’s Kappa formula to obtain the result.
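The steps above can be sketched as a single Python function that works from the four cell counts. The name `cohens_kappa_2x2` and the sample counts are hypothetical and chosen only for illustration.

```python
def cohens_kappa_2x2(a: int, b: int, c: int, d: int) -> float:
    """Cohen's kappa for a 2x2 contingency table.

    a: both raters say Yes      b: A says Yes, B says No
    c: A says No, B says Yes    d: both raters say No
    """
    n = a + b + c + d
    if n == 0:
        raise ValueError("the table must contain at least one rating pair")

    # Observed agreement: share of items on the diagonal.
    p_o = (a + d) / n
    # Expected agreement: product of the raters' marginal totals per category.
    p_e = ((a + b) * (a + c) + (c + d) * (b + d)) / n**2

    if p_e == 1.0:
        # Happens when every rating falls in a single cell; kappa is undefined.
        raise ValueError("kappa is undefined when expected agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical counts: 100 items, 80 on the diagonal, balanced marginals.
k = cohens_kappa_2x2(a=40, b=10, c=10, d=40)
print(f"{k:.3f}")  # 0.600
```

With these counts P_o is 0.8 and P_e is 0.5, so the result is (0.8 – 0.5) / (1 – 0.5) = 0.6.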

Table of General Terms and Relevant Calculations

| Term | Definition |
|---|---|
| Inter-Rater Reliability | A measure of how consistently different raters evaluate the same phenomena. |
| Cohen’s Kappa (k) | A statistic that measures inter-rater reliability for categorical items. |
| Observed Agreement (P_o) | The proportion of times that raters agree. |
| Expected Agreement (P_e) | The probability that raters agree by chance. |
| Contingency Table | A matrix used to calculate the agreements and disagreements between raters. |

This table is a quick reference for the key terms and calculations behind the reliability calculator.

Example of Inter Rater Reliability Calculator

Scenario:

Two psychologists are assessing a group of patients for a specific psychological condition and must classify each patient as either ‘High Risk’ or ‘Low Risk’. After evaluating 10 patients, they recorded the following totals:

- Rater A: High Risk: 5 patients, Low Risk: 5 patients

- Rater B: High Risk: 4 patients, Low Risk: 6 patients

Their patient-by-patient assessments are:

| Patient ID | Rater A Assessment | Rater B Assessment |
|---|---|---|
| 1 | High Risk | High Risk |
| 2 | Low Risk | Low Risk |
| 3 | High Risk | Low Risk |
| 4 | Low Risk | Low Risk |
| 5 | High Risk | High Risk |
| 6 | Low Risk | High Risk |
| 7 | High Risk | High Risk |
| 8 | Low Risk | Low Risk |
| 9 | High Risk | Low Risk |
| 10 | Low Risk | Low Risk |

Calculations:

Construct a Contingency Table:

|  | Rater B: High Risk | Rater B: Low Risk |
|---|---|---|
| Rater A: High Risk | 3 (a) | 2 (b) |
| Rater A: Low Risk | 1 (c) | 4 (d) |
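The cell counts can also be tallied programmatically from the patient-by-patient assessments. The sketch below is one possible way to do this in Python; the helper name `tally_2x2`, the list names, and the shortened labels "High" and "Low" are assumptions made for the example.

```python
from collections import Counter

# Assessments for patients 1 to 10, in order, copied from the scenario.
rater_a = ["High", "Low", "High", "Low", "High", "Low", "High", "Low", "High", "Low"]
rater_b = ["High", "Low", "Low", "Low", "High", "High", "High", "Low", "Low", "Low"]


def tally_2x2(ratings_a, ratings_b, positive="High"):
    """Return the cell counts (a, b, c, d) of the 2x2 contingency table."""
    if len(ratings_a) != len(ratings_b):
        raise ValueError("both raters must rate the same number of items")
    # Count each (A is positive, B is positive) combination.
    pairs = Counter(
        (ra == positive, rb == positive) for ra, rb in zip(ratings_a, ratings_b)
    )
    a = pairs[(True, True)]    # both High Risk
    b = pairs[(True, False)]   # A High Risk, B Low Risk
    c = pairs[(False, True)]   # A Low Risk, B High Risk
    d = pairs[(False, False)]  # both Low Risk
    return a, b, c, d


print(tally_2x2(rater_a, rater_b))  # (3, 2, 1, 4)
```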

Calculate Observed Agreement (P_o):

P_o = (a + d) / (a + b + c + d) = (3 + 4) / 10 = 0.7

Calculate Expected Agreement (P_e):

P_e = ((a + b) * (a + c) + (c + d) * (b + d)) / (a + b + c + d)^2

= ((3 + 2) * (3 + 1) + (1 + 4) * (2 + 4)) / 10^2

= (5 * 4 + 5 * 6) / 100 = (20 + 30) / 100 = 0.5

Compute Cohen’s Kappa:

k = (P_o – P_e) / (1 – P_e) = (0.7 – 0.5) / (1 – 0.5) = 0.4

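A Cohen’s Kappa of 0.4 indicates some agreement beyond chance between the two raters. On the commonly used Landis and Koch scale, it sits at the top of the ‘fair’ band (0.21 to 0.40), just below ‘moderate’ (0.41 to 0.60).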
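The arithmetic in this example can be re-checked with exact fractions, which avoids floating-point rounding in the intermediate values. This is only a verification sketch; the variable names are chosen for the example.

```python
from fractions import Fraction

# Cell counts from the example's contingency table.
a, b, c, d = 3, 2, 1, 4
n = a + b + c + d

# Observed agreement: (a + d) / n
p_o = Fraction(a + d, n)
# Expected agreement: ((a + b)(a + c) + (c + d)(b + d)) / n^2
p_e = Fraction((a + b) * (a + c) + (c + d) * (b + d), n**2)
# Cohen's kappa: (P_o - P_e) / (1 - P_e)
kappa = (p_o - p_e) / (1 - p_e)

print(p_o, p_e, kappa)  # 7/10 1/2 2/5
print(float(kappa))     # 0.4
```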

Most Common FAQs

Inter-rater reliability measures the agreement among different raters, indicating whether assessments are consistent across observers.

Cohen’s Kappa accounts for the agreement expected by chance, which makes it a more informative measure of reliability than simple percent agreement.
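To illustrate the difference between percent agreement and Cohen’s Kappa, the Python sketch below uses hypothetical counts (not taken from any study) in which both raters say ‘Yes’ for almost every item. Percent agreement looks high, yet Kappa is slightly below zero because nearly all of that agreement is expected by chance.

```python
# Hypothetical, heavily skewed 2x2 table: both raters say "Yes" 95% of the time.
a, b, c, d = 90, 5, 5, 0
n = a + b + c + d

# Simple percent agreement is the same quantity as P_o.
p_o = (a + d) / n
# Chance agreement is large because both raters almost always choose "Yes".
p_e = ((a + b) * (a + c) + (c + d) * (b + d)) / n**2
kappa = (p_o - p_e) / (1 - p_e)

print(f"percent agreement = {p_o:.3f}")  # percent agreement = 0.900
print(f"expected by chance = {p_e:.3f}")  # expected by chance = 0.905
print(f"kappa = {kappa:.3f}")             # kappa = -0.053
```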