The Bellman Equation Calculator is a specialized tool that computes the optimal value of a state in a sequential decision-making problem using dynamic programming. It is most commonly used in reinforcement learning, economics, and operations research. The Bellman equation itself is a recursive formula that breaks a complex decision problem into simpler sub-problems: the value of a state is expressed in terms of the immediate reward and the values of the states that can follow it. This makes it possible to calculate the maximum expected discounted reward obtainable from a given state. The equation forms the backbone of many algorithms for solving Markov Decision Processes (MDPs), which model systems where outcomes are partly random and partly under the control of a decision-maker.

The calculator simplifies the process of finding the optimal strategy by automatically performing the necessary calculations. By inputting the relevant parameters, such as the reward function, transition probabilities, and discount factor, users can quickly determine the value of a given state, which in turn informs the best possible action to take in that state. This tool is invaluable for those working on optimization problems in various fields, enabling them to make more informed and strategic decisions.

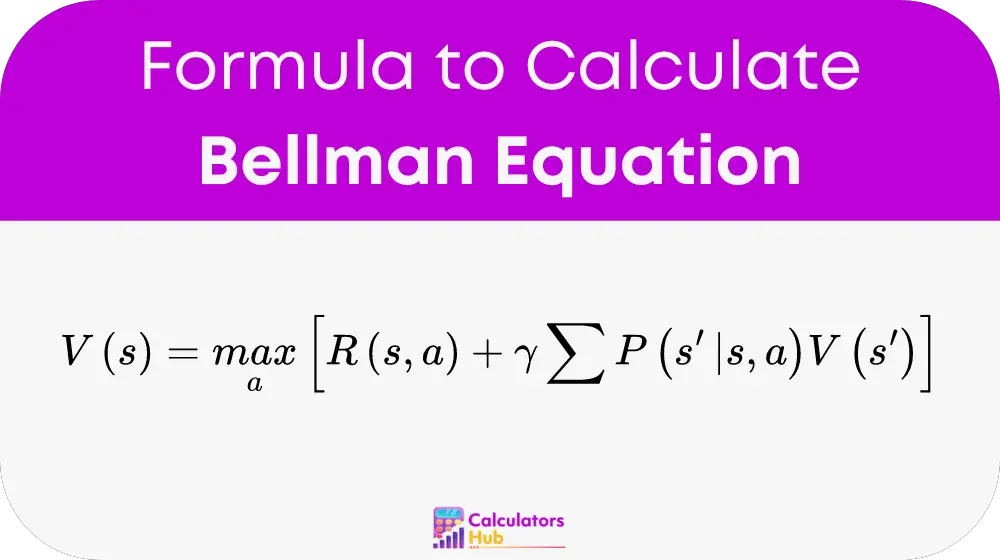

Formula of Bellman Equation Calculator

The Bellman equation is represented as:

$$V(s) = \max_{a} \left[ R(s, a) + \gamma \sum_{s'} P(s' \mid s, a) \, V(s') \right]$$

Where:

- V(s) is the value of state s.

- max_a represents taking the maximum over all possible actions a.

- R(s, a) is the immediate reward for taking action a in state s.

- γ is the discount factor, which determines the importance of future rewards.

- Σ represents the sum over all possible next states s’.

- P(s’|s, a) is the probability of transitioning to state s’ from state s after taking action a.

- V(s’) is the value of the next state s’.

This formula is central to dynamic programming and is used to determine the value function, which is essential in decision-making processes where outcomes are uncertain.
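The terms defined above can be turned into a short program. The following is a minimal sketch, not the calculator's actual implementation: the function names `bellman_backup` and `value_iteration`, the dictionary layout for rewards and transition probabilities, and the tolerance are all assumptions chosen for illustration. The first function evaluates the right-hand side of the Bellman equation for one state; the second applies it repeatedly to every state until the values stop changing, which is the standard value iteration procedure.

```python
from typing import Dict, Hashable, Iterable, Mapping, Tuple

State = Hashable
Action = Hashable


def bellman_backup(
    state: State,
    actions: Iterable[Action],
    reward: Mapping[Tuple[State, Action], float],
    transition: Mapping[Tuple[State, Action], Mapping[State, float]],
    values: Mapping[State, float],
    gamma: float,
) -> Tuple[float, Action]:
    """Return (V(s), best action) for one state.

    reward[(s, a)] holds R(s, a); transition[(s, a)][s2] holds P(s2 | s, a).
    This layout is an illustrative assumption, not a fixed format.
    """
    best_value, best_action = float("-inf"), None
    for a in actions:
        # Expected value of the next state: sum over s' of P(s'|s, a) * V(s')
        expected_next = sum(
            p * values[s2] for s2, p in transition[(state, a)].items()
        )
        q = reward[(state, a)] + gamma * expected_next
        if q > best_value:
            best_value, best_action = q, a
    return best_value, best_action


def value_iteration(
    states: Iterable[State],
    actions: Iterable[Action],
    reward: Mapping[Tuple[State, Action], float],
    transition: Mapping[Tuple[State, Action], Mapping[State, float]],
    gamma: float,
    tol: float = 1e-8,
    max_iter: int = 10_000,
) -> Dict[State, float]:
    """Apply the Bellman backup to all states until the largest change is below tol."""
    states = list(states)
    actions = list(actions)
    values = {s: 0.0 for s in states}
    for _ in range(max_iter):
        new_values = {
            s: bellman_backup(s, actions, reward, transition, values, gamma)[0]
            for s in states
        }
        delta = max(abs(new_values[s] - values[s]) for s in states)
        values = new_values
        if delta < tol:
            break
    return values
```

This sketch assumes every action is available in every state and that the probabilities for each (state, action) pair sum to 1; neither is checked in the code.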

Useful Conversion Table

For those who frequently work with dynamic programming and the Bellman equation, the following table offers a quick reference for the main terms and their typical values. This can save time and improve accuracy when working with the Bellman Equation Calculator.

| Term | Description | Common Values |
|---|---|---|
| Discount Factor (γ) | Determines how future rewards are valued compared to immediate rewards. | 0.9, 0.95, 0.99 |
| Immediate Reward (R) | The reward received after taking an action in a given state. | Varies based on the context |
| Transition Probability (P) | The likelihood of moving from one state to another after an action. | 0.1, 0.5, 0.9 |
| Value of State (V) | The long-term value of being in a particular state. | Calculated using the formula |

These values are commonly used in various applications of the Bellman equation, and having them readily available can save time when setting up a calculation.
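To get a feel for the discount factors listed in the table, it can help to look at how quickly the weight γ^t on a reward t steps ahead shrinks. The sketch below is illustrative only: the helper names `discount_weight` and `effective_horizon` are assumptions, and the "effective horizon" 1 / (1 − γ) is a common rule of thumb (the sum of the series 1 + γ + γ² + ...), not something the calculator itself reports. Under that rule of thumb, γ = 0.9, 0.95, and 0.99 correspond to horizons of roughly 10, 20, and 100 steps.

```python
def discount_weight(gamma: float, steps: int) -> float:
    """Weight applied to a reward received `steps` steps in the future."""
    return gamma ** steps


def effective_horizon(gamma: float) -> float:
    """Sum of 1 + gamma + gamma^2 + ..., a rough planning horizon in steps."""
    if not 0.0 <= gamma < 1.0:
        raise ValueError("gamma must satisfy 0 <= gamma < 1")
    return 1.0 / (1.0 - gamma)


for gamma in (0.9, 0.95, 0.99):
    print(
        f"gamma={gamma}: weight of a reward 10 steps ahead = "
        f"{discount_weight(gamma, 10):.3f}, "
        f"effective horizon = {effective_horizon(gamma):.0f} steps"
    )
```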

Example of Bellman Equation Calculator

Consider a scenario where an agent needs to decide between two actions in a particular state. The immediate rewards and probabilities for transitioning to new states are as follows:

- Action A: Immediate reward = 10, Transition to State 1 with probability 0.7, Transition to State 2 with probability 0.3

- Action B: Immediate reward = 5, Transition to State 1 with probability 0.4, Transition to State 2 with probability 0.6

- Discount factor (γ) = 0.95

- Value of State 1 (V1) = 50

- Value of State 2 (V2) = 30

Using the Bellman equation:

- For Action A: 10 + 0.95 * [0.7 * 50 + 0.3 * 30] = 10 + 0.95 * [35 + 9] = 10 + 41.8 = 51.8

- For Action B: 5 + 0.95 * [0.4 * 50 + 0.6 * 30] = 5 + 0.95 * [20 + 18] = 5 + 36.1 = 41.1

In this case, Action A has a higher value (51.8) than Action B (41.1), so Action A is the optimal choice and V(s) = max(51.8, 41.1) = 51.8.
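The arithmetic in this example can be checked with a few lines of Python. This is a sketch that uses the numbers given above; the variable names and the dictionary layout are illustrative assumptions rather than the calculator's input format.

```python
gamma = 0.95

# Values of the two possible next states, as given in the example.
next_state_values = {"State 1": 50.0, "State 2": 30.0}

# Immediate reward and transition probabilities for each action.
actions = {
    "A": {"reward": 10.0, "transitions": {"State 1": 0.7, "State 2": 0.3}},
    "B": {"reward": 5.0, "transitions": {"State 1": 0.4, "State 2": 0.6}},
}


def action_value(spec: dict) -> float:
    """R(s, a) + gamma * sum over s' of P(s'|s, a) * V(s') for one action."""
    expected_next = sum(
        p * next_state_values[s] for s, p in spec["transitions"].items()
    )
    return spec["reward"] + gamma * expected_next


action_values = {name: action_value(spec) for name, spec in actions.items()}

# The Bellman equation takes the maximum over actions.
best_action = max(action_values, key=action_values.get)
state_value = action_values[best_action]

for name, q in action_values.items():
    print(f"Action {name}: {q:.1f}")
print(f"Optimal action: {best_action}, V(s) = {state_value:.1f}")
```

Running it prints 51.8 for Action A and 41.1 for Action B, and selects Action A, matching the hand calculation.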

Most Common FAQs

What is the Bellman equation used for?

The Bellman equation is used to determine the optimal strategy in decision-making processes where the outcome is partly random and partly under the control of the decision-maker. It is fundamental in dynamic programming and reinforcement learning.

What does the discount factor do?

The discount factor (γ) determines the importance of future rewards compared to immediate rewards. A higher discount factor places more value on future rewards, while a lower discount factor prioritizes immediate rewards.

Can the Bellman equation be applied to real-world problems?

Yes, the Bellman equation is widely used in fields such as economics, robotics, and artificial intelligence to optimize decision-making processes in environments where outcomes are uncertain.